NOTONO is an open-source web interface for assisted sound design using inpainting, a general framework where users interactively transform media by performing smart, local edits using Machine Learning models.

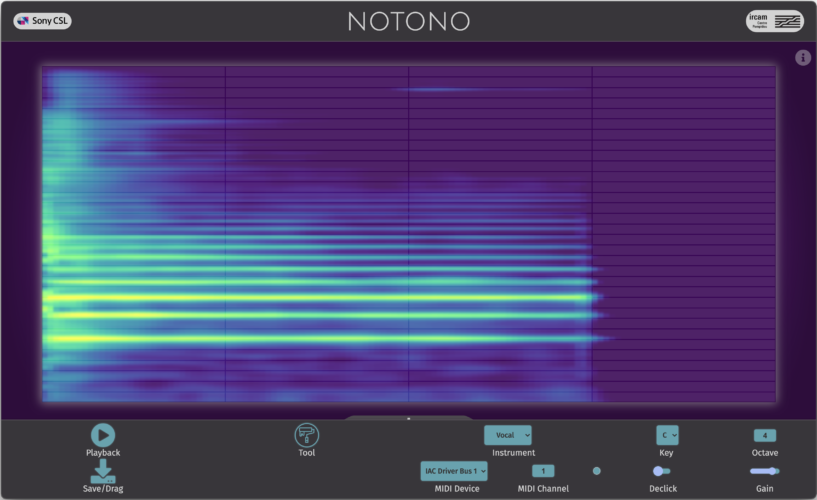

More precisely, NOTONO helps you generate and transform instrument sounds through paint-like operations. In an approach radically different from traditional synthesizers, which sometimes expose dozens of knobs and faders, our interface lets you visualize the sound in time and frequency and simply select zones to regenerate: paint some guitar at the beginning for a more stringy attack, or maybe some organ in the lower frequencies for some of that analog “oomph”, or even paint some bright trumpet textures in the mid and high frequencies at the end of your sound. Then hook up a MIDI keyboard to your computer and use the resulting sound like a synthesizer to play chords and melodies. You can even keep editing the sound or let it morph on its own as you’re playing for unexpected transitions!
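To make the "select zones to regenerate" idea concrete, here is a minimal sketch of how a painted rectangle could be turned into an inpainting mask over a time-frequency grid. It assumes the sound is represented as a grid of cells (rows for frequency, columns for time); the `Region` type and the `buildInpaintingMask` function are hypothetical names for illustration, not NOTONO's actual data model.

```typescript
// Hypothetical description of one painted zone (not NOTONO's real types).
interface Region {
  timeStart: number;  // first time column, inclusive
  timeEnd: number;    // last time column, exclusive
  freqStart: number;  // first frequency row, inclusive
  freqEnd: number;    // last frequency row, exclusive
  instrument: string; // timbre the user painted with, e.g. "guitar"
}

// Build a boolean mask: true marks cells to regenerate, false marks cells to keep.
function buildInpaintingMask(
  numFreqRows: number,
  numTimeCols: number,
  region: Region
): boolean[][] {
  const mask: boolean[][] = [];
  for (let f = 0; f < numFreqRows; f++) {
    const row: boolean[] = [];
    for (let t = 0; t < numTimeCols; t++) {
      const inFreq = f >= region.freqStart && f < region.freqEnd;
      const inTime = t >= region.timeStart && t < region.timeEnd;
      row.push(inFreq && inTime);
    }
    mask.push(row);
  }
  return mask;
}

// Example: paint "guitar" over the first two time columns, across all frequencies.
const attackMask = buildInpaintingMask(4, 8, {
  timeStart: 0,
  timeEnd: 2,
  freqStart: 0,
  freqEnd: 4,
  instrument: "guitar",
});
```

In a setup like this, the mask and the chosen instrument would be handed to the model, which regenerates only the masked cells and leaves the rest of the sound untouched.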

Last but not least: through the use of open-source web technologies (such as the Web Audio API) and standard communication protocols (MIDI, REST APIs for interaction with the Machine Learning backends), this interface is open to hacking and customization, making it a great fit for makers.
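As an illustration of how little glue these standards require, here is a rough sketch of decoding a MIDI note-on message and mapping it to a playback rate for a sampled sound. This is an assumption about how one might hack on such an interface, not a description of NOTONO's internals: `parseNoteOn` and `playbackRateForNote` are hypothetical helpers. In a browser, the raw bytes would typically come from the Web MIDI API (`MIDIMessageEvent.data`) and the rate could be applied to an `AudioBufferSourceNode.playbackRate`.

```typescript
// Hypothetical helper: decode a raw MIDI message into a note-on event.
// Returns null for anything that is not an audible note-on.
function parseNoteOn(
  data: Uint8Array
): { note: number; velocity: number } | null {
  if (data.length < 3) return null;
  const isNoteOn = (data[0] & 0xf0) === 0x90; // status nibble 0x9 = note-on
  const note = data[1];
  const velocity = data[2];
  // By MIDI convention, a note-on with velocity 0 is treated as a note-off.
  if (!isNoteOn || velocity === 0) return null;
  return { note, velocity };
}

// Hypothetical helper: playback rate that transposes a sample recorded at
// `rootNote` so that it sounds at `note` (equal temperament, 12 semitones
// per octave).
function playbackRateForNote(note: number, rootNote: number): number {
  return Math.pow(2, (note - rootNote) / 12);
}

// Example: a sound generated at middle C (note 60), played one octave higher.
const noteOn = parseNoteOn(new Uint8Array([0x90, 72, 100]));
const rate = noteOn ? playbackRateForNote(noteOn.note, 60) : 1;
```

Resampling by playback rate is only one simple option: it changes the duration of the sound along with its pitch, so a different approach may be preferable depending on the use case.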