Diff-A-Riff: Our New Musical Accompaniment Co-Creation Model

In modern music production, artists often build tracks incrementally, layering sounds and instruments to create a cohesive and enjoyable piece of music. The process usually starts with a blank canvas, as producers stack sounds and apply effects, progressively constructing the song’s structure and feel. Over the past 50 years, Digital Audio Workstations (DAWs) have been developed to help producers manage multiple tracks and enrich their music through intricate workflows.

Today, advances in technology are offering even more ways to enhance this process. From tools that classify sound libraries to those that generate brand-new material, musicians have access to cutting-edge capabilities. However, many recent innovations bypass the essential “layering” approach to music-making, generating fully composed pieces instead of individual components. This disconnects them from the reality of how modern artists work.

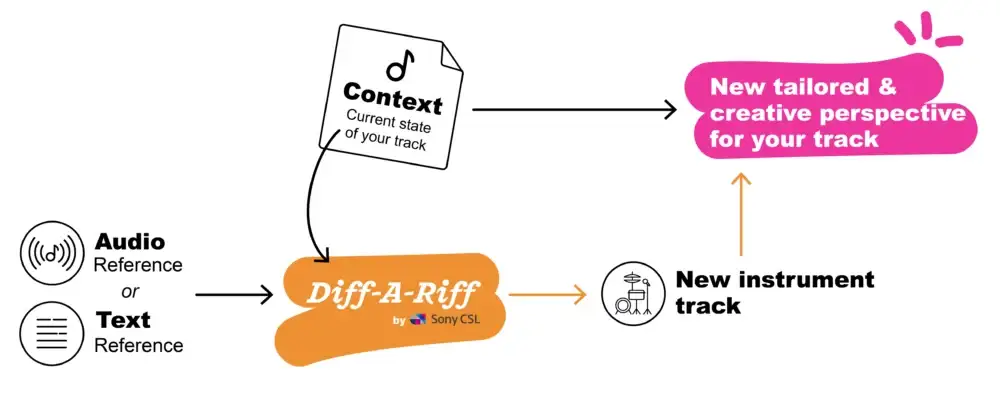

Introducing Diff-A-Riff: a new tool, co-created by musicians and engineers, to address this gap. Diff-A-Riff is an intuitive yet powerful tool designed specifically to generate individual instrument tracks that fit into an existing music production. It combines a pre-trained Consistency Autoencoder (CAE), which compresses audio into a compact latent representation, with a diffusion model that generates in that latent space. The diffusion model follows the Elucidated Diffusion Models (EDM) framework and uses a DDPM++ network architecture. The result: Diff-A-Riff generates instrumental accompaniments that fit the musical piece they are given.
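
For readers curious about the diffusion side, here is a minimal sketch of how an EDM-style sampler walks a latent from pure noise to a clean estimate. It is an illustration under stated assumptions, not Diff-A-Riff's implementation: the noise schedule and its defaults (`sigma_min=0.002`, `sigma_max=80`, `rho=7`) are the ones from the original EDM paper, the sampler is a plain Euler method (EDM itself proposes a second-order Heun sampler), and `denoise` is a hypothetical placeholder for the trained network. In the real system, the sampled latent would then be decoded back to audio by the CAE decoder.

```python
import random


def karras_sigmas(n_steps, sigma_min=0.002, sigma_max=80.0, rho=7.0):
    """EDM noise levels (Karras et al., 2022): n_steps values (n_steps >= 2)
    from sigma_max down to sigma_min, evenly spaced in sigma**(1/rho),
    followed by a final 0."""
    hi = sigma_max ** (1.0 / rho)
    lo = sigma_min ** (1.0 / rho)
    sigmas = [(hi + i / (n_steps - 1) * (lo - hi)) ** rho for i in range(n_steps)]
    return sigmas + [0.0]


def euler_sample(denoise, dim, n_steps=30, seed=0):
    """Deterministic Euler sampler for a latent vector of length `dim`.

    `denoise(x, sigma)` is a hypothetical stand-in for the trained network:
    given a noisy latent x at noise level sigma, it returns an estimate of
    the clean latent.
    """
    rng = random.Random(seed)
    sigmas = karras_sigmas(n_steps)
    # Start from pure Gaussian noise at the highest noise level.
    x = [rng.gauss(0.0, sigmas[0]) for _ in range(dim)]
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        x0 = denoise(x, sigma)
        # Probability-flow ODE slope in EDM's parameterization:
        # dx/dsigma = (x - D(x, sigma)) / sigma
        slope = [(xi - x0i) / sigma for xi, x0i in zip(x, x0)]
        # Euler step from sigma to sigma_next.
        x = [xi + (sigma_next - sigma) * si for xi, si in zip(x, slope)]
    return x


# Usage with a toy denoiser that always predicts the same clean latent
# (the ideal denoiser when the "data" is a single point).
target = [0.5, -1.0, 2.0]
z = euler_sample(lambda x, sigma: target, dim=3)
```

With this toy denoiser the final step lands on `target` (up to floating-point rounding); a trained model would instead produce a different clean estimate at each noise level, shaped by the context and reference.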

One of its standout features is its flexibility: you can guide the generation with either a reference audio track or a simple text description.

How Does It Work?

Let’s break it down in simpler terms. Imagine you’re working on a track and need to add a new element. We’ll call your current project the “context.” You might also have a specific idea, or “reference,” for what this new element should sound like. Diff-A-Riff takes the context and, optionally, your reference (an audio clip or a text prompt) and generates as many candidate tracks as you need, each designed to fit into your mix.
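
One standard way to let optional inputs steer a diffusion model is classifier-free guidance (Ho & Salimans, 2022). This post does not describe Diff-A-Riff's conditioning at this level of detail, so the sketch below is hypothetical. It assumes that the reference (audio or text) has already been turned into a vector in a shared embedding space, as a joint audio-text model such as CLAP provides, and that the model was trained with the reference randomly dropped so that it can also run without one. `guided_denoise`, `denoise` and `toy_denoise` are illustrative placeholders, not a real API.

```python
def guided_denoise(denoise, x, sigma, context, reference, guidance=2.0):
    """Classifier-free guidance on the reference condition (hypothetical).

    `denoise(x, sigma, context, reference)` stands in for the network and
    returns a clean-latent estimate; reference=None means "no reference".
    guidance=0 ignores the reference, 1 gives the plain conditional estimate,
    and values above 1 push the output further toward the reference.
    The context is always kept, so the result stays tied to the mix.
    """
    with_ref = denoise(x, sigma, context, reference)
    without_ref = denoise(x, sigma, context, None)
    return [w0 + guidance * (w1 - w0) for w1, w0 in zip(with_ref, without_ref)]


def toy_denoise(x, sigma, context, reference):
    # Stand-in "network": echoes the context, shifted by the reference if present.
    out = list(context)
    if reference is not None:
        out = [c + r for c, r in zip(out, reference)]
    return out


print(guided_denoise(toy_denoise, [0.0, 0.0], 1.0, [1.0, 2.0], [0.5, -0.5]))
# → [2.0, 1.0]
```

Dropping only the reference (rather than both inputs) is a design choice made for this sketch: it lets a single `guidance` value control how strongly the reference shapes the output while the context is always respected.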

Its user-centric design makes it easy to integrate into a musician’s workflow. Artists provide simple inputs (a track, an audio reference, or even a text description), and Diff-A-Riff handles the rest, generating high-quality accompaniments that suit the context. This new AI model lets musicians focus on creativity, simplifying the production process without sacrificing artistic control.

A Rock ‘n’ Roll Example

In collaboration with music producer Carli Nistal, we used Diff-A-Riff to create a Rock ‘n’ Roll demo. We started with a simple loop as the context, and the tool generated multiple instrumental tracks, including guitars, bass, Hammond organ, piano, and lead guitar. This video shows Diff-A-Riff in action in a real-world production workflow.

Diff-A-Riff in action: Rock ‘n’ Roll Demo

Key Features of Diff-A-Riff

– Adaptability: Diff-A-Riff adapts to the musical context it is given, following the style of the artist’s existing material.

– Optional Controls: Use text prompts, audio references, an interpolation slider, pseudo-stereo width, and loop intensity for customization.

– High-Quality Audio: Generates audio at a 48 kHz sample rate in (pseudo-)stereo, with sound quality that human raters judged indistinguishable from real audio.

– Efficiency: Smaller and more efficient than previous models, thanks to the Consistency Autoencoder. It takes just 6 seconds to generate 90 seconds of music on a single GPU.
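
To put the audio and speed figures above in perspective, the short calculation below turns them into a real-time factor and a sample count. It only restates the post's numbers and assumes two output channels for (pseudo-)stereo; the GPU model, batch size, and number of sampling steps behind the 6-second figure are not given here.

```python
SAMPLE_RATE_HZ = 48_000  # output sample rate stated in the post
AUDIO_SECONDS = 90.0     # length of audio generated ...
WALL_SECONDS = 6.0       # ... in this much wall-clock time on one GPU
CHANNELS = 2             # (pseudo-)stereo output


def real_time_factor(audio_seconds, wall_seconds):
    """Seconds of audio produced per second of computation."""
    return audio_seconds / wall_seconds


def total_samples(audio_seconds, sample_rate_hz, channels):
    """Number of PCM sample values in the output across all channels."""
    return round(audio_seconds * sample_rate_hz) * channels


rtf = real_time_factor(AUDIO_SECONDS, WALL_SECONDS)
n = total_samples(AUDIO_SECONDS, SAMPLE_RATE_HZ, CHANNELS)
print(f"{rtf:.0f}x real time, {n:,} samples, {n / WALL_SECONDS:,.0f} samples/s")
# → 15x real time, 8,640,000 samples, 1,440,000 samples/s
```

In other words, generation runs about 15 times faster than playback, which is what makes it practical to audition many candidate tracks in a session.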

Diff-A-Riff also supports more advanced features: audio inpainting, interpolation, controlled noise addition for creating variations, pseudo-stereo from mono signals, and loop sampling for repeated segments. These features give musicians new ways to experiment with their sound.
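
The post does not say how interpolation or noise-based variations are implemented, so the sketch below only illustrates two common techniques, with that caveat. `slerp` blends two embedding vectors; we assume, hypothetically, that the interpolation slider moves between two references (for example a text prompt and an audio clip) encoded into the same embedding space, and we normalize both to unit length. `noisy_start` follows the SDEdit idea: perturb the latent of an existing track to an intermediate noise level, then let a sampler denoise it from there, so a small `strength` yields a close variation and a large one a freer reinterpretation. Both function names and the linear strength-to-noise mapping are our own illustrative choices.

```python
import math
import random


def slerp(a, b, t):
    """Spherical interpolation between nonzero vectors a and b, t in [0, 1].

    Both inputs are normalized to unit length first (an assumption of this
    sketch); the result is also unit length.
    """
    na = math.sqrt(sum(v * v for v in a))
    nb = math.sqrt(sum(v * v for v in b))
    ua = [v / na for v in a]
    ub = [v / nb for v in b]
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(ua, ub))))
    omega = math.acos(dot)  # angle between the two directions
    s = math.sin(omega)
    if s < 1e-6:
        # (Near-)parallel or opposite vectors: slerp is ill-defined,
        # so fall back to linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(ua, ub)]
    wa = math.sin((1 - t) * omega) / s
    wb = math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(ua, ub)]


def noisy_start(latent, strength, sigma_max=80.0, seed=0):
    """Perturb an existing latent as a starting point for a variation.

    strength in [0, 1] is mapped linearly to a noise level: 0 keeps the
    latent unchanged, 1 drowns it in noise at sigma_max. Returns the noisy
    latent and the noise level a sampler should start denoising from.
    """
    sigma = strength * sigma_max
    rng = random.Random(seed)
    return [z + sigma * rng.gauss(0.0, 1.0) for z in latent], sigma


# Usage: halfway between two (toy) reference embeddings, and a mild variation.
blend = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
varied, sigma_start = noisy_start([0.3, -0.2, 1.1], strength=0.25)
```

Spherical rather than linear interpolation is a common choice for normalized embeddings because the blend stays on the unit sphere instead of shrinking toward the origin halfway between the endpoints.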

Diff-A-Riff in action: Disco Demo

Wrapping Up

To sum it up, Diff-A-Riff simplifies the music production process, making it easier for artists to generate instrumental tracks that align with their creative vision. It fits modern workflows by generating individual instrument tracks that blend into your mix. Whether you guide it with audio or text, Diff-A-Riff lets you create in new and exciting ways, without the need for extensive technical knowledge.

Our new AI model was developed by our music researchers: Javier Nistal, Marco Pasini, Cyran Aouameur, Maarten Grachten, and Stefan Lattner.

Listen to more musical examples:

Reggae Demo

Follow Sony CSL – Paris on social media for the latest updates on our other research projects & prototypes.